JIM REDMAN, President, Ergotech

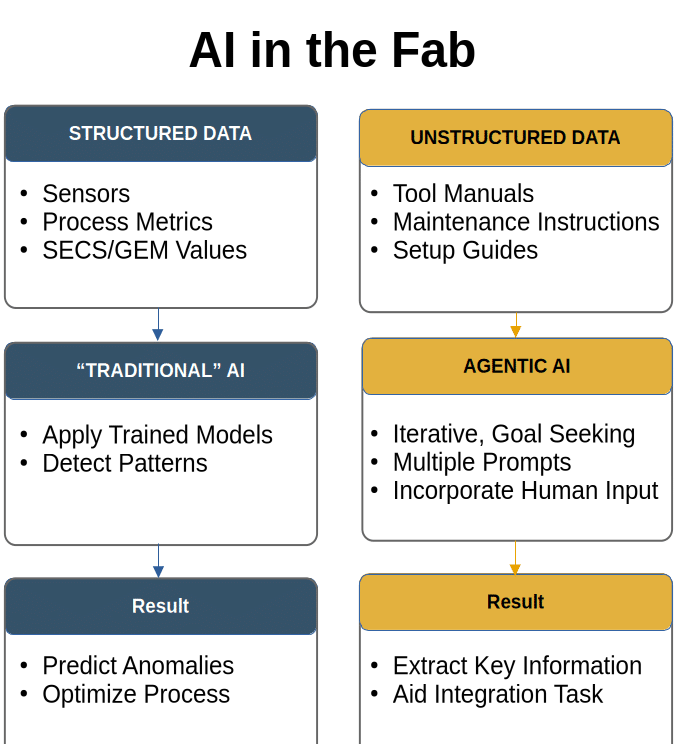

Most fabs are already using AI in some form – from spotting anomalies to fine-tuning process steps using mountains of equipment data. In that sense, AI isn’t new to the industry. What is new is agentic AI, which is gaining traction as a way of harnessing AI in more autonomous, goal-directed ways.

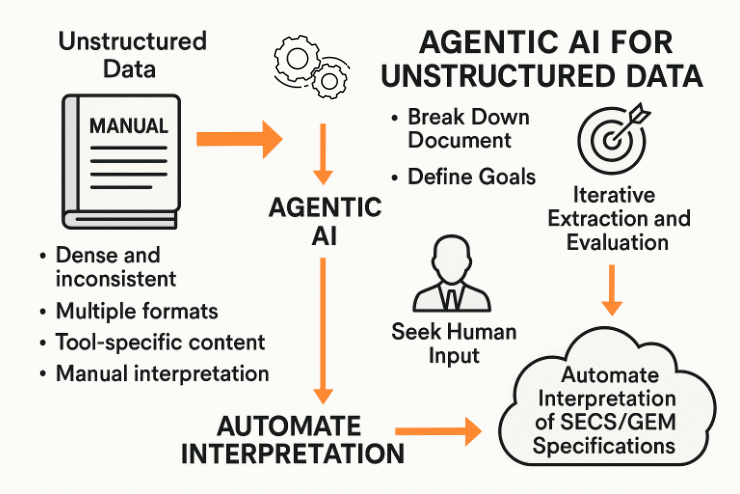

Despite the fancy name, agentic AI isn’t a brand-new type of intelligence. It’s a smarter way of using the tools we already have, particularly large language models (LLMs) such as the ones behind ChatGPT. Instead of giving an AI a single question and hoping for the right answer, an agentic system takes a goal and works toward it step by step (FIGURE 1). It tries different strategies, adapts when things don’t work, and can even ask a human for help when needed. It operates dynamically, building something closer to a decision tree and asking questions based on previous responses: testing, learning, and adjusting as it goes.
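To make the goal-driven loop concrete, here is a minimal sketch, assuming each "strategy" is a hypothetical stand-in for one way of attacking the goal (a single LLM prompt, a document search, a tool call). The names `run_agent`, `ask_directly`, and `search_manual` are illustrative, not part of any real framework. The loop tries a strategy, checks the result, switches strategy on failure, and escalates to a human when nothing passes.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple

# Hypothetical strategy type: receives the goal plus the history of earlier
# attempts and returns an answer, or None if it could not produce one.
Strategy = Callable[[str, list], Optional[str]]


@dataclass
class AgentResult:
    answer: Optional[str]
    needs_human: bool
    history: List[Tuple[str, Optional[str], bool]] = field(default_factory=list)


def run_agent(goal: str,
              strategies: List[Strategy],
              is_acceptable: Callable[[str], bool],
              max_steps: int = 5) -> AgentResult:
    """Work toward `goal` step by step, trying another strategy when one fails."""
    if not strategies:
        raise ValueError("at least one strategy is required")
    history: List[Tuple[str, Optional[str], bool]] = []
    for step in range(max_steps):
        strategy = strategies[step % len(strategies)]  # rotate through strategies
        answer = strategy(goal, history)
        ok = answer is not None and is_acceptable(answer)
        history.append((getattr(strategy, "__name__", "strategy"), answer, ok))
        if ok:
            return AgentResult(answer, needs_human=False, history=history)
    # No strategy produced an acceptable answer: ask a person instead of guessing.
    return AgentResult(None, needs_human=True, history=history)


# Usage with two toy strategies: the one-shot prompt "fails", the search succeeds.
def ask_directly(goal: str, history: list) -> Optional[str]:
    return None


def search_manual(goal: str, history: list) -> Optional[str]:
    return "SVID 1001: ChamberPressure (U4, mTorr)"


result = run_agent("Find the chamber pressure SVID",
                   [ask_directly, search_manual],
                   is_acceptable=lambda a: a.startswith("SVID"))
print(result.answer, result.needs_human)
# prints: SVID 1001: ChamberPressure (U4, mTorr) False
```

The acceptance check is what separates this from a single prompt: an answer that fails it is never returned, and exhausting the step budget produces an explicit request for human input rather than a guess.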

Structured data

Currently, most applications of AI in fabs analyze structured data: tool sensor readings, SECS/GEM values, and process metrics. In that domain, most of the analysis is performed by what we might consider “classical” AI: Machine Learning (ML) and Deep Learning (DL) approaches, often based on neural networks, that either require training or provide unsupervised classification.
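As a small illustration of unsupervised screening of structured tool data, the sketch below flags outliers in a sensor trace. To keep it short and self-contained it swaps in a simple robust statistic (the modified z-score based on the median absolute deviation, with the commonly used 3.5 cutoff) instead of a neural network; the pressure values and the `mad_anomalies` name are hypothetical.

```python
import statistics
from typing import List, Sequence


def mad_anomalies(values: Sequence[float], threshold: float = 3.5) -> List[int]:
    """Return indices of readings whose modified z-score exceeds `threshold`.

    Modified z-score = 0.6745 * (x - median) / MAD, where MAD is the median
    absolute deviation. Median and MAD are not pulled around by the very
    outliers we are looking for, unlike mean and standard deviation.
    """
    med = statistics.median(values)
    mad = statistics.median([abs(v - med) for v in values])
    if mad == 0:
        return []  # at least half the readings are identical; score undefined
    return [i for i, v in enumerate(values)
            if 0.6745 * abs(v - med) / mad > threshold]


# Chamber pressure trace (mTorr) from a hypothetical SECS/GEM status variable.
pressure = [100.1, 99.8, 100.0, 100.2, 99.9, 100.1, 100.0, 112.5, 99.9, 100.0]
print(mad_anomalies(pressure))  # [7]
```

With only ten samples, a plain mean-and-standard-deviation z-score would barely reach 3 for the 112.5 reading because the outlier inflates the spread; the median-based version flags it clearly.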

That work will remain important, and it is an area where agentic AI can add value. One emerging capability is using LLMs in an agentic fashion to assist in structured data analysis. LLMs can act as intelligent orchestrators: not only analyzing data directly through classification or summarization, but also suggesting the most appropriate analysis methods for a given dataset and problem context. Instead of manually selecting a neural network model or preprocessing pipeline, engineers could describe the data and the goal, then let the LLM propose, and even script, viable approaches using classical ML/DL models. Having AI models create other AI models, rather than delivering a result directly, is a rapidly evolving field; one example is this work by Google DeepMind (https://arstechnica.com/ai/2025/05/google-deepmind-creates-super-advanced-ai-that-can-invent-new-algorithms/), which aims to improve accuracy by evaluating multiple generated models.
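The orchestration pattern can be sketched as "LLM proposes, classical code evaluates." In this minimal example, `propose_methods` is a hypothetical stub standing in for an LLM that reads a dataset description; each proposed candidate is scored with walk-forward, one-step-ahead mean absolute error on points it has not seen, and the best one is kept. The three toy forecasters are illustrative only and are not the DeepMind method.

```python
import statistics
from typing import Callable, Dict, List, Sequence, Tuple


# Candidate one-step-ahead forecasters: each maps a history to a next-value guess.
def mean_model(train: Sequence[float]) -> float:
    return statistics.fmean(train)


def last_value_model(train: Sequence[float]) -> float:
    return train[-1]


def linear_trend_model(train: Sequence[float]) -> float:
    # Least-squares line through (index, value), extrapolated one step ahead.
    n = len(train)
    x_mean = (n - 1) / 2
    y_mean = statistics.fmean(train)
    sxx = sum((x - x_mean) ** 2 for x in range(n))
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(train))
    return y_mean + (sxy / sxx) * (n - x_mean)


MODELS: Dict[str, Callable[[Sequence[float]], float]] = {
    "mean": mean_model,
    "last_value": last_value_model,
    "linear_trend": linear_trend_model,
}


def propose_methods(description: str) -> List[str]:
    """Hypothetical stand-in for an LLM call that suggests methods to try."""
    if "drift" in description.lower():
        return ["linear_trend", "last_value", "mean"]
    return ["mean", "last_value"]


def walk_forward_mae(model, series: Sequence[float], min_train: int = 3) -> float:
    """Mean absolute error of one-step-ahead forecasts on unseen points."""
    errors = [abs(model(series[:i]) - series[i])
              for i in range(min_train, len(series))]
    return statistics.fmean(errors)


def select_model(description: str,
                 series: Sequence[float]) -> Tuple[str, Dict[str, float]]:
    """Score every proposed method and return the best name plus all scores."""
    scores = {name: walk_forward_mae(MODELS[name], series)
              for name in propose_methods(description)}
    return min(scores, key=scores.get), scores


# A slowly drifting chamber temperature (illustrative values).
temps = [10.0, 10.5, 11.0, 11.5, 12.0, 12.5, 13.0]
best, scores = select_model("Chamber temperature with slow drift", temps)
print(best)  # linear_trend
```

The key design choice is that the LLM never reports a result itself: its suggestions are only candidates, and a deterministic evaluation on held-out data decides which one is used.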

This introduces a new level of agility into structured data analysis. In this agentic role, the LLM doesn’t replace classical AI but enhances it – guiding it with contextual awareness and flexibility. This is where neurosymbolic AI also comes into play. Neurosymbolic AI blends neural learning (like deep learning) with symbolic reasoning (like logic or rule-based systems). It allows systems to not only learn from data but also reason about it. Used in tandem with LLMs, neurosymbolic approaches can help build AI systems that are both data-driven and logically coherent – bridging the gap between pattern recognition and structured decision-making (FIGURE 2).
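A neurosymbolic combination can be sketched as a learned score plus explicit rules that both add their own findings and decide when the learned score applies. In this minimal example, `neural_score` is a placeholder for a trained model (its weights are invented, not learned), and the tool states and limits in `RULES` are hypothetical; the point is that every flag comes with a human-readable reason.

```python
import math
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Reading:
    tool_state: str   # illustrative states: "PROCESSING", "IDLE"
    pressure: float   # chamber pressure, mTorr
    rf_power: float   # RF power, W


def neural_score(r: Reading) -> float:
    """Placeholder for a trained network's anomaly probability.
    The weights are invented for illustration, not learned."""
    z = 0.05 * (r.pressure - 100.0) + 0.01 * (r.rf_power - 500.0)
    return 1.0 / (1.0 + math.exp(-z))


# Symbolic layer: explicit, auditable rules (limits are illustrative only).
RULES: List[Tuple[str, Callable[[Reading], bool]]] = [
    ("pressure above hard limit while processing",
     lambda r: r.tool_state == "PROCESSING" and r.pressure > 150.0),
    ("RF power on while tool is idle",
     lambda r: r.tool_state == "IDLE" and r.rf_power > 0.0),
]


def assess(r: Reading, score_threshold: float = 0.9) -> Tuple[bool, List[str]]:
    """Combine rule violations with the learned score; return (flag, reasons)."""
    reasons = [name for name, violated in RULES if violated(r)]
    score = neural_score(r)
    # Symbolic context gates the neural output: only trusted while processing.
    if r.tool_state == "PROCESSING" and score > score_threshold:
        reasons.append(f"learned anomaly score {score:.2f}")
    return bool(reasons), reasons


print(assess(Reading("IDLE", 100.0, 50.0)))
# (True, ['RF power on while tool is idle'])
```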

Reading documents

Agentic AI opens the door to something new: making sense of unstructured data. That means extracting relevant, accurate information from the dense, inconsistent, and often confusing tool manuals, maintenance instructions, and setup guides that engineers rely on every day. These documents have traditionally required a human to read and interpret them, which slows everything down – especially when onboarding new tools.

The traditional challenge with data of this type is that there is no consistent pattern. Vendors deliver manuals in different formats, and the content is, necessarily, tool-specific. Interpreting it often requires a domain expert.

While LLMs are particularly well suited to interpreting unstructured text, their effectiveness is often limited when they are used with a single prompt. Without additional context or guidance, they may miss key details or misinterpret ambiguous content, especially in technical domains like semiconductor manufacturing. A common problem stems from trying to extract too much information with one overly broad prompt. This often leads to vague or hallucinated responses, in which the model fills gaps with plausible-sounding but inaccurate information. Reasoning LLMs and attribution maps have improved transparency and shed more light on the models’ inner workings, yet hallucinations and blind spots remain.

One way to improve performance is Retrieval-Augmented Generation (RAG), a technique that supplements the LLM’s responses with relevant information pulled from a connected knowledge base. RAG helps the AI ground its answers in specific documentation rather than relying solely on general knowledge. For this to work well, however, the system needs access to curated, domain-specific documents that actually contain the answers to likely queries. Even with additional training or reference material, LLMs can still deliver factually incorrect information if they misunderstand the context.
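The retrieval half of RAG can be sketched in a few lines. This minimal example ranks manual excerpts against a question and builds a prompt that restricts the LLM to those excerpts. It uses plain bag-of-words cosine similarity as a simple stand-in for the embedding models most RAG systems use, and the `MANUAL` excerpts are hypothetical; the LLM call itself is omitted.

```python
import math
import re
from collections import Counter
from typing import List


def tokenize(text: str) -> List[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: List[str], k: int = 2) -> List[str]:
    """Return the k chunks most similar to the query."""
    q = Counter(tokenize(query))
    return sorted(chunks, key=lambda c: cosine(q, Counter(tokenize(c))),
                  reverse=True)[:k]


def build_prompt(query: str, chunks: List[str], k: int = 2) -> str:
    """Ground the LLM: pass only retrieved excerpts and ask it to cite them."""
    excerpts = retrieve(query, chunks, k)
    context = "\n".join(f"[{i}] {c}" for i, c in enumerate(excerpts, start=1))
    return ("Answer using ONLY the numbered excerpts below and cite them. "
            "If they do not contain the answer, say so.\n\n"
            f"{context}\n\nQuestion: {query}")


# Hypothetical excerpts from a tool's GEM manual.
MANUAL = [
    "SVID 1001 ChamberPressure reports chamber pressure in mTorr, format U4.",
    "Alarm 2003 is set when the load lock door is open during transfer.",
    "The tool accepts S1F13 Establish Communications Request from the host.",
]

print(build_prompt("Which SVID reports chamber pressure?", MANUAL, k=1))
```

Note that the "say so" instruction only helps if the knowledge base actually holds the answer; retrieval cannot ground a response in a document that was never curated into it.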

Enter agentic AI

Agentic AI helps mitigate these potential errors by breaking the task into smaller, more specific prompts that are guided by the responses to previous questions. This step-by-step, context-aware process not only improves accuracy but also helps identify uncertainties and can allow human verification or other input. By allowing the AI to operate iteratively and incorporate feedback, agentic AI reduces the likelihood of errors and hallucinations – leading to results that are both more relevant and more reliable.
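The decomposition described above can be sketched as a chain of narrow prompts, each built from the previous answer, with low-confidence steps queued for human review. In this minimal example, `Ask` is a hypothetical LLM interface returning an answer and a confidence; in practice that confidence might come from token log-probabilities or agreement across repeated runs, since a model’s self-reported confidence is a weak signal. `fake_ask` returns canned responses for demonstration only.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical LLM interface: prompt in, (answer, confidence in 0..1) out.
Ask = Callable[[str], Tuple[str, float]]


def extract_units(ask: Ask, min_conf: float = 0.7):
    """Replace one broad prompt with small ones, each built from earlier answers.

    Returns (units per SVID, items flagged for human review)."""
    review: List[Tuple[str, str, float]] = []
    units: Dict[str, str] = {}

    def step(label: str, prompt: str) -> str:
        answer, conf = ask(prompt)
        if conf < min_conf:
            review.append((label, answer, conf))  # keep going, but flag it
        return answer

    section = step("section", "Which manual section lists the status variables?")
    id_list = step("SVID list",
                   f"List only the SVID numbers defined in section {section}.")
    for svid in (s.strip() for s in id_list.split(",") if s.strip()):
        units[svid] = step(f"units of SVID {svid}",
                           f"In section {section}, what units does SVID {svid} use?")
    return units, review


# Canned responses standing in for a model, for demonstration only.
def fake_ask(prompt: str) -> Tuple[str, float]:
    if prompt.startswith("Which manual section"):
        return "7.2", 0.95
    if prompt.startswith("List only"):
        return "1001, 1002", 0.90
    if "SVID 1001" in prompt:
        return "mTorr", 0.92
    if "SVID 1002" in prompt:
        return "W", 0.40
    return "unknown", 0.0


units, review = extract_units(fake_ask)
print(units)   # {'1001': 'mTorr', '1002': 'W'}
print(review)  # [('units of SVID 1002', 'W', 0.4)]
```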

SECS/GEM: Where standards meet real-world chaos

As a practical example of how agentic AI can help, we’ll explore a system that uses this approach to automate the reading and interpretation of SECS/GEM specifications, which define how semiconductor tools talk to factory software. Along the way, we’ll touch on the benefits, challenges, and bigger picture: what this kind of AI might mean for the future of fab automation.
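Whatever an agent extracts from a SECS/GEM manual still needs checking before host software relies on it. The sketch below is a hypothetical validation pass over extracted status-variable records, not the system described in the full article: it checks that each SVID is a unique non-negative integer, that a name is present, and that the data format is one of the common SECS-II (SEMI E5) item format names. The record fields `svid`, `name`, and `format` are assumptions, and the format list is deliberately not exhaustive.

```python
from typing import Any, Dict, List

# Common SECS-II (SEMI E5) item format names; not an exhaustive list.
SECS_FORMATS = {"L", "B", "BOOLEAN", "A", "J", "I1", "I2", "I4", "I8",
                "F4", "F8", "U1", "U2", "U4", "U8"}


def validate_svids(records: List[Dict[str, Any]]) -> List[str]:
    """Check extracted status-variable rows; return a list of problems found."""
    problems: List[str] = []
    seen = set()
    for i, rec in enumerate(records):
        svid = rec.get("svid")
        if not isinstance(svid, int) or isinstance(svid, bool) or svid < 0:
            problems.append(f"row {i}: SVID {svid!r} is not a non-negative integer")
        elif svid in seen:
            problems.append(f"row {i}: duplicate SVID {svid}")
        else:
            seen.add(svid)
        if not str(rec.get("name", "")).strip():
            problems.append(f"row {i}: missing name")
        if rec.get("format") not in SECS_FORMATS:
            problems.append(f"row {i}: unknown SECS-II format {rec.get('format')!r}")
    return problems


extracted = [
    {"svid": 1001, "name": "ChamberPressure", "format": "U4"},
    {"svid": 1001, "name": "RFPower", "format": "F4"},   # duplicate ID
    {"svid": "10O2", "name": "", "format": "FLOAT"},     # letter O, no name, bad format
]
for problem in validate_svids(extracted):
    print(problem)
```

Any problems found would be routed back to the agent for a narrower follow-up prompt or to an engineer for review, rather than silently passed on.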

Click here to read the full article in Semiconductor Digest.